Let us take the word count example, where we write a MapReduce job to count the words in a file, i.e., how many times each word is repeated in the file.

Let's consider the text file below:

To keep things simple, we have taken a small text file. Suppose this file is broken into chunks and distributed across Nodes A, B, C and D in the cluster.

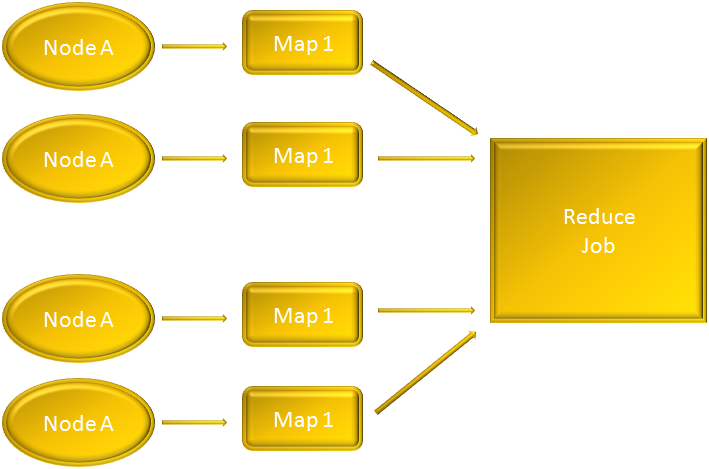

In the above scenario, the file is distributed across Nodes A, B, C and D, and a Map job runs on each node, i.e., Map1, Map2, Map3 and Map4.

Since the above example is for word count, each Map is responsible for counting the words on its own node. As we have seen, the output of a Map is a set of key-value pairs, where the key is the actual word and the value is the count.
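To make the Map step concrete, here is a minimal sketch in plain Python rather than the Hadoop API. The function name `map_words` and the sample chunk are assumptions made for illustration and are not the article's file. The sketch also assumes that words are separated by whitespace and that the mapper emits one pair with a count of 1 for each occurrence of a word.

```python
def map_words(chunk):
    """Emit a (word, 1) pair for every whitespace-separated word in one chunk."""
    return [(word, 1) for word in chunk.split()]


# Hypothetical chunk held by one node (not taken from the article's file).
pairs = map_words("In the cluster")
print(pairs)  # [('In', 1), ('the', 1), ('cluster', 1)]
```

Each node would run the same function on its own chunk, so Map1 to Map4 differ only in the text they receive.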

For Node A, the Map1 output as key-value pairs is going to be:

Similarly, for Node B, the Map2 output is going to be:

The same logic applies to Node C and Node D.

So, all the Maps have produced their output as key-value pairs, and this output is sent to the reducer.

Now, the reducer takes the keys from all the Mapper outputs and combines the values for each key.

To get a clear picture, let us match the above output of the reducer with the mapper outputs.

For Node A, the Map1 output was:

{In ,1}

And for Node B, the Map2 output was also:

{In ,1}

Now, the reducer combines the two pairs that share the same key and adds their values. So, you get:

{In ,2}

Similarly, all the other keys are combined, and you get the desired word count as key-value pairs.
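The Reduce step for the `In` example can be sketched as follows. This is an illustrative plain-Python version, not the Hadoop API. The function name `reduce_counts` and the variable names are assumptions, and the only data used is the pair `{In ,1}` from Map1 and Map2 shown above.

```python
from collections import defaultdict


def reduce_counts(mapper_outputs):
    """Sum the values of identical keys across all mapper outputs."""
    totals = defaultdict(int)
    for pairs in mapper_outputs:
        for word, count in pairs:
            totals[word] += count
    return dict(totals)


# The two pairs from the walkthrough: Map1 and Map2 each emitted {In, 1}.
map1_output = [("In", 1)]
map2_output = [("In", 1)]

print(reduce_counts([map1_output, map2_output]))  # {'In': 2}
```

Passing the outputs of all four Maps in the list would give the total for every word in the same way.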